Limitations of SDN without Hardware Offload

With the transition toward network virtualization and software-defined networking (SDN), telecom operators have begun to realize that server CPUs alone are not enough to carry the network data plane.

As bandwidth requirements steadily increase, standard SDN running on CPU cores can no longer keep pace. Many networking and security functions are handled more efficiently in hardware. Encryption and decryption, for example, are especially CPU-intensive on a server.

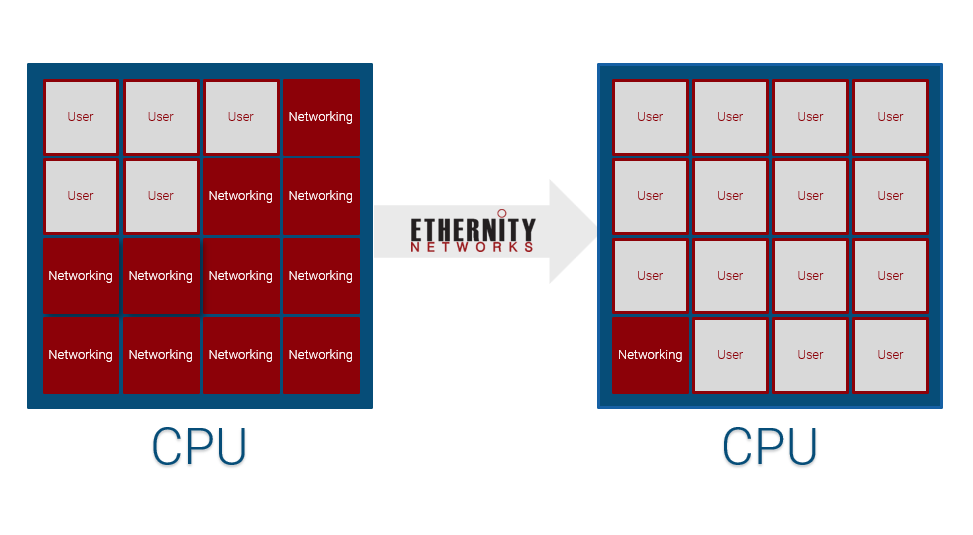

In cloud-based IaaS offerings, this cuts into profit margins. A cloud provider's profit is tied directly to the number of virtual machines it can host on a single server: every core spent on the network data plane is one fewer core available to sell.
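The margin argument above is simple arithmetic. The sketch below is a hypothetical illustration: the function name, the core counts, and the fixed cores-per-VM size are assumptions, not figures from any provider.

```python
def sellable_vms(total_cores: int, dataplane_cores: int, cores_per_vm: int) -> int:
    """Whole VMs that fit on the cores left after the data plane takes its share.

    Hypothetical model: every VM uses the same fixed number of cores.
    """
    if dataplane_cores > total_cores:
        raise ValueError("data plane cannot use more cores than the server has")
    return (total_cores - dataplane_cores) // cores_per_vm


if __name__ == "__main__":
    # Assumed example: a 32-core server selling 2-core VMs.
    for dp in (0, 4, 8):
        print(f"{dp} data-plane cores -> {sellable_vms(32, dp, 2)} sellable VMs")
```

With these assumed numbers, dedicating 8 of 32 cores to the data plane drops the server from 16 to 12 sellable VMs, a 25% loss of inventory on that machine.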

Running networking in software alone may have worked for 1GbE, but SDN struggles today to keep up with 40GbE, and it will need to support 100GbE and beyond in the years to come.

Processing network traffic overburdens the server CPU: multiple (sometimes all) CPU cores are needed to run common networking functions even at 10 Gbps. Above 40 Gbps, CPU-based processing delivers highly variable and unpredictable throughput and latency.
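One way to see why line rate quickly outruns a CPU is to compute the per-packet cycle budget. The sketch below assumes worst-case minimum-size (64-byte) Ethernet frames plus the standard 20 bytes of on-wire overhead (preamble, start-of-frame delimiter, inter-frame gap) and a hypothetical 3 GHz core; real traffic mixes larger packets, so actual budgets are usually more generous.

```python
# Ethernet on-wire overhead per frame:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20
MIN_FRAME_BYTES = 64  # minimum Ethernet frame size, including the FCS


def packets_per_second(line_rate_gbps: float, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Maximum frames per second at the given line rate and frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_gbps * 1e9 / bits_per_frame


def cycles_per_packet(line_rate_gbps: float, core_ghz: float = 3.0, cores: int = 1,
                      frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """CPU cycles available per packet if `cores` cores share the load evenly.

    The 3 GHz default clock is an assumption for illustration.
    """
    total_cycles_per_second = core_ghz * 1e9 * cores
    return total_cycles_per_second / packets_per_second(line_rate_gbps, frame_bytes)


if __name__ == "__main__":
    for rate in (1, 10, 40, 100):
        mpps = packets_per_second(rate) / 1e6
        budget = cycles_per_packet(rate)
        print(f"{rate:>3} GbE: {mpps:7.2f} Mpps, {budget:7.1f} cycles/packet on one 3 GHz core")
```

Under these assumptions, a single core has roughly 200 cycles per packet at 10 Gbps and only about 20 at 100 Gbps, which leaves little room for lookups, encryption, or cache misses before more cores, or offload hardware, are required.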

This is driving telcos and cloud providers alike to examine hardware offloading to avoid burning CPU cores on networking and security functions.

Hardware Alternatives for SDN

When it comes to offloading the datapath to hardware, there are a few options, in order from least to most efficient:

- Multicore CPU
- GPU / NPU (network processing unit)
- FPGA (field-programmable gate array)
- ASIC (application-specific integrated circuit)